I. Configuration

1. OpenCV

Install the dependencies:

    sudo apt-get install libvtk5-dev

Install OpenCV 2.4.13:

    git clone https://github.com/opencv/opencv

Note: downloading OpenCV is very slow.

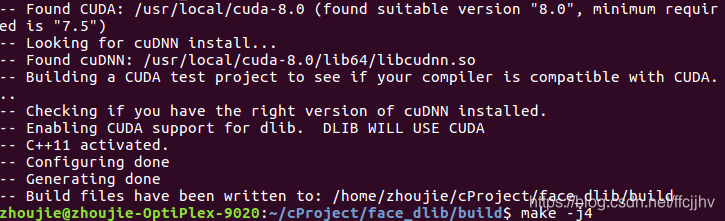

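2. dlib (GPU build)

The GPU driver, CUDA, and cuDNN must be installed beforehand.

Go to the official site, http://dlib.net/, click "Download dlib ver.19.17" in the lower-left corner, and extract the archive once it has downloaded.

From the dlib root directory:

    mkdir build && cd build

Note: the build automatically checks whether the requirements for the GPU build are met, so watch the messages printed on the command line.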

II. Connecting the Kinect v1

1. Install OpenNI2

    git clone https://github.com/occipital/OpenNI2.git

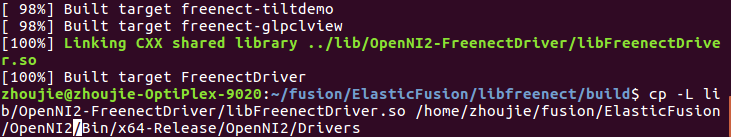

2. Install libfreenect

    git clone https://github.com/OpenKinect/libfreenect

Open libfreenect/CMakeLists.txt and, on the line after `cmake_minimum_required(VERSION 2.8.12)` (line 33), add:

    add_definitions(-std=c++11)

Save and close the file, then continue on the command line:

    mkdir build && cd build

Note: `${OPENNI2_DIR}` is the directory where OpenNI2 was extracted; mine, for example, is inside the ElasticFusion folder.

Then, from the libfreenect folder, run:

    sudo cp platform/linux/udev/51-kinect.rules /etc/udev/rules.d

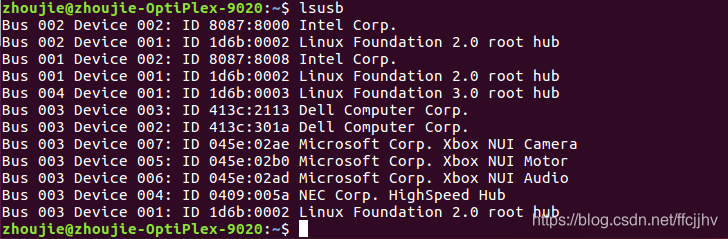

After rebooting, plug in the Kinect and run `lsusb` on the command line; the output should include Xbox camera, Xbox motor, and Xbox audio.

III. Running the code

1. Edit CMakeLists.txt

CMakeLists.txt:

    cmake_minimum_required(VERSION 2.8.4)

Change `OPENNI2_PATH` and the dlib path to match your own setup.

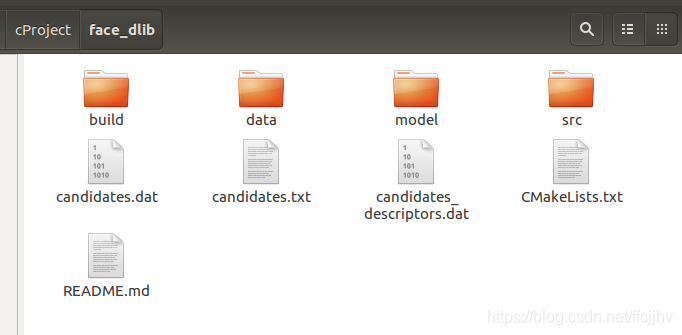

2. Download the data and models

Download shape_predictor_68_face_landmarks.dat and dlib_face_recognition_resnet_model_v1.dat and place them in the model folder.

Link: https://pan.baidu.com/s/1jIoW6BSa5nkGWNipL7sxVQ

The download contains:

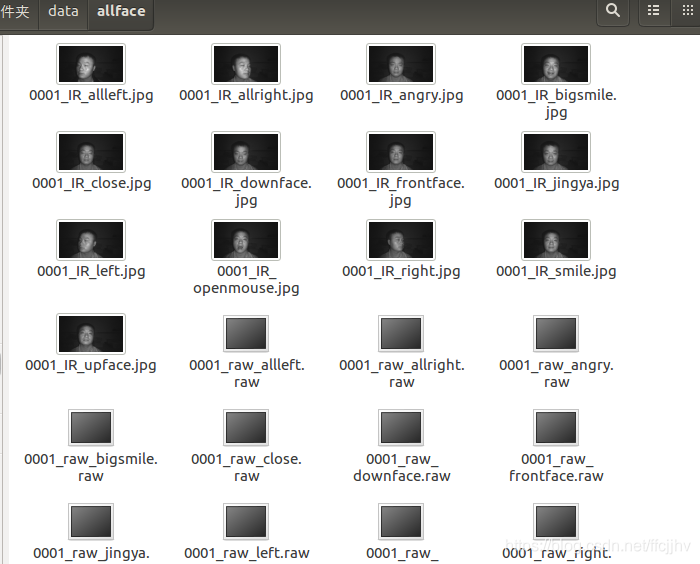

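- candidate-face.zip (face library: 29 frontal-face infrared images)

- allface.zip (test set: 29 people, with infrared and depth images for 13 facial poses each)

- shape_predictor_68_face_landmarks.dat (68-point facial landmark detector)

- dlib_face_recognition_resnet_model_v1.dat (face recognition model)

3. Run the code

Place train_candidate.cpp, this_is_who.cpp, and this_is_who_kinect_one.cpp in the src folder.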

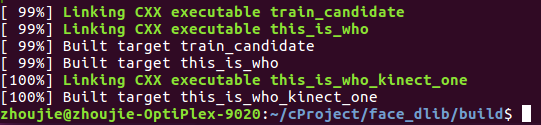

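Build:

    mkdir build && cd build

When running cmake, check whether the GPU build of dlib is being used.

The build produces several executables in the build folder.

Rename each face image to the person's name and put it in the data folder.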

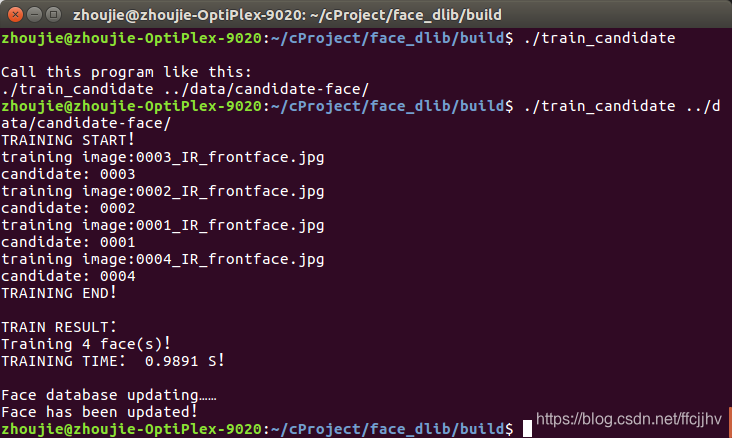

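1. Run train_candidate to extract the feature descriptors of the face library. They are stored in candidates_descriptors.dat and candidates.dat, and candidates.txt is generated alongside them so the candidate list is easy to inspect. Whenever the face library changes, just rerun train_candidate to update the face data.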
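Because each image in the data folder is named after the person it shows, the candidate's name can be recovered from the file path alone. The following is a minimal sketch of that idea using only the standard library; `candidate_name` is a hypothetical helper introduced here for illustration and is not taken from train_candidate.cpp.

```cpp
#include <cstddef>
#include <string>

// Hypothetical helper (not from train_candidate.cpp): derive a candidate's
// name from an image path, e.g. "data/alice.png" -> "alice".
std::string candidate_name(const std::string& path) {
    // Drop everything up to and including the last path separator.
    const std::size_t slash = path.find_last_of("/\\");
    const std::string file =
        (slash == std::string::npos) ? path : path.substr(slash + 1);

    // Drop the extension; a name without a dot (or a leading-dot file)
    // is returned unchanged.
    const std::size_t dot = file.find_last_of('.');
    if (dot == std::string::npos || dot == 0) {
        return file;
    }
    return file.substr(0, dot);
}
```

Calling `candidate_name("data/alice.png")` yields `"alice"`, which is the kind of label one would expect to see listed in candidates.txt.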

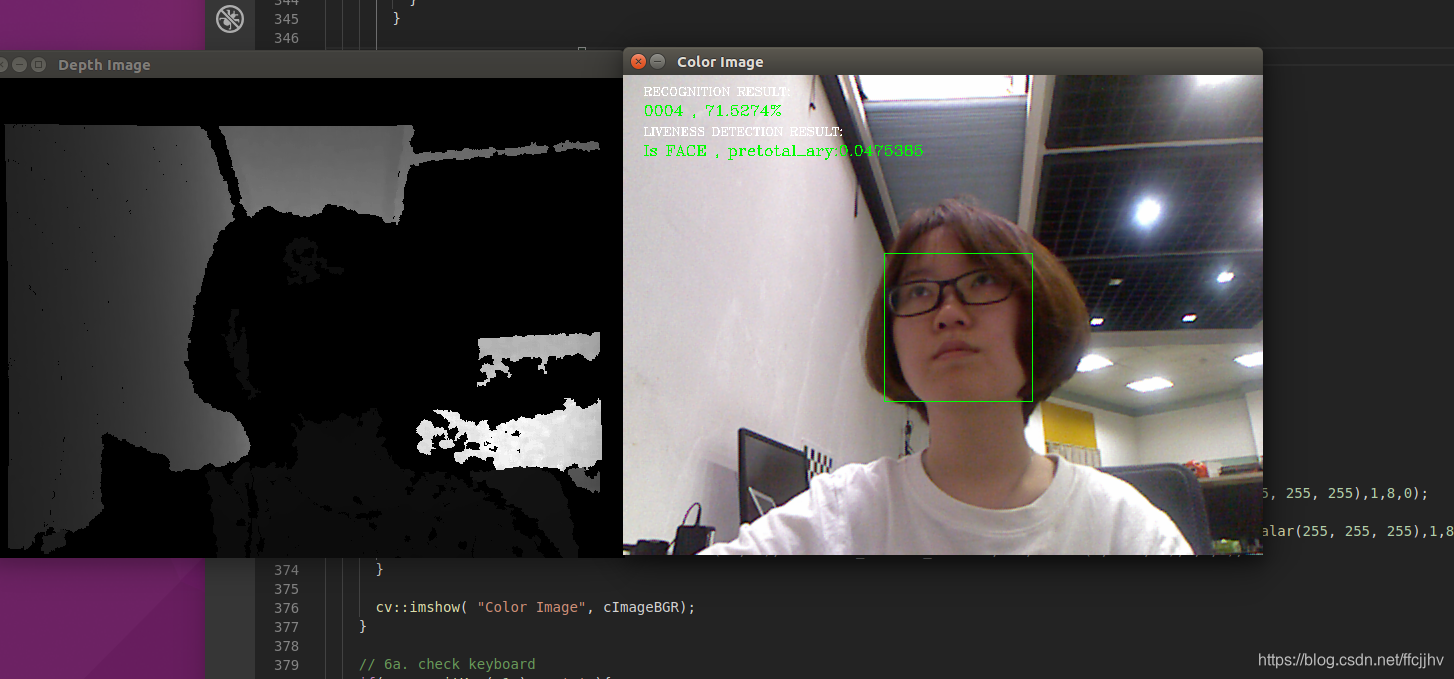

2. Run this_is_who_kinect_one to capture Kinect depth and color images in real time and perform face recognition and liveness detection.
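The recognition step can be pictured as a nearest-neighbour search over the stored descriptors. The sketch below assumes the usual dlib convention for dlib_face_recognition_resnet_model_v1.dat, where two face descriptors closer than 0.6 in Euclidean distance are treated as the same person. The `Candidate` struct and the `identify` function are hypothetical names for illustration; the actual matching logic in this_is_who_kinect_one.cpp may differ.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <string>
#include <vector>

// One entry of the face library: a name plus its face descriptor.
// (Hypothetical type; dlib itself uses dlib::matrix<float, 0, 1>.)
struct Candidate {
    std::string name;
    std::vector<float> descriptor;
};

// Euclidean distance between two descriptors of equal length.
float descriptor_distance(const std::vector<float>& a,
                          const std::vector<float>& b) {
    assert(a.size() == b.size());
    float sum = 0.0f;
    for (std::size_t i = 0; i < a.size(); ++i) {
        const float d = a[i] - b[i];
        sum += d * d;
    }
    return std::sqrt(sum);
}

// Returns the name of the closest candidate, or "unknown" when no
// candidate is closer than `threshold` (0.6 is an assumed default).
std::string identify(const std::vector<Candidate>& library,
                     const std::vector<float>& query,
                     float threshold = 0.6f) {
    std::string best_name = "unknown";
    float best_dist = threshold;
    for (const Candidate& c : library) {
        const float dist = descriptor_distance(c.descriptor, query);
        if (dist < best_dist) {
            best_dist = dist;
            best_name = c.name;
        }
    }
    return best_name;
}
```

Returning "unknown" when nothing falls under the threshold keeps a stranger from being labelled as the nearest library member.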

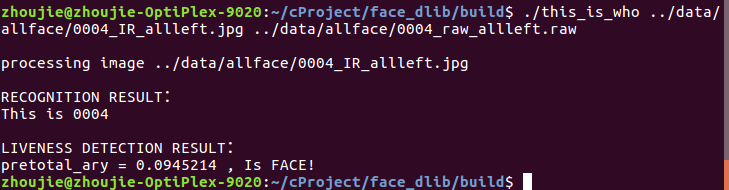

3. To test on offline data without a live Kinect v1, run this_is_who, which uses the depth and infrared images in data/allface to perform face recognition and liveness detection.
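The post does not say how the liveness check uses the depth image, so the following is only one plausible heuristic, not the author's method. It assumes depth values in millimetres with 0 marking an invalid reading, and it treats a face region as "live" when the spread between its nearest and farthest valid pixel exceeds a minimum relief. The function name and the 15 mm default are assumptions for illustration.

```cpp
#include <algorithm>
#include <cstdint>
#include <limits>
#include <vector>

// Hypothetical liveness heuristic (an assumption, not the post's method):
// a real face has depth relief (nose vs. cheeks), whereas a flat photo
// held parallel to the sensor has almost none.
// `face_depth_mm` holds the depth values of the face region in millimetres;
// 0 is assumed to mark an invalid reading and is skipped.
bool looks_three_dimensional(const std::vector<std::uint16_t>& face_depth_mm,
                             std::uint16_t min_relief_mm = 15) {
    std::uint16_t lo = std::numeric_limits<std::uint16_t>::max();
    std::uint16_t hi = 0;
    bool any_valid = false;
    for (std::uint16_t d : face_depth_mm) {
        if (d == 0) continue;  // skip invalid pixels
        lo = std::min(lo, d);
        hi = std::max(hi, d);
        any_valid = true;
    }
    // No valid depth at all is treated as "not live".
    return any_valid && (hi - lo) >= min_relief_mm;
}
```

A plain min/max range is easy to fool with a tilted photo or a noisy pixel; a more robust check would fit a plane to the region or compare percentiles, which is beyond this sketch.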

Code:

- train_candidate.cpp

- this_is_who_kinect_one.cpp

- this_is_who.cpp

The code is fairly rough, and I probably won't be revising it again.